Posted on

4.6.2025

Skill Assessments: The Key to Personalized L&D

Category:

Research [R&D]

Reading time

10

minutes

The future of personalisation in professional online learning lies in detailed, but fast and effective skills assessments. The use of skill assessments not only empowers employees to reach their full potential, but also drives organisations to be more innovative, productive and resilient in an increasingly competitive business environment. In this blog, we will take a closer look at what skill assessments are, the different types of skill assessments, the best practices for conducting them, and why granular assessments are key to success. Stay tuned for invaluable insights into how competency assessment can transform organisational learning and ignite the path to excellence.

In today's dynamic and ever-evolving business landscape, staying competitive is paramount for any organisation striving for success. To achieve this, organisations need to invest in their most valuable asset: their people. As the pace of technological advancement accelerates, traditional approaches to learning and development are rapidly being replaced by more efficient and targeted methods. Among these, skill assessments have emerged as a game-changing tool that is revolutionising the way organisations develop their talent.

The importance of skill assessments in corporate learning cannot be overstated.

A skills assessment is a structured assessment or test designed to measure an individual's knowledge and competence in specific areas of knowledge, expertise or practical skills. Its purpose is to objectively assess an individual's current skill level, identify strengths and weaknesses, and provide valuable data for tailoring targeted learning and development programmes. Skills assessments play a critical role in corporate learning and talent management, helping individuals and organisations to chart personalised growth paths and optimise performance in various professional domains.

The personalization and customization of learning is a hot topic and surely deserves a whole blog post series on its own, so let’s keep it short here. It essentially boils down to the rather obvious anyway:

Everybody is different!

We all bring different personalities, cultures, previous knowledge and experience, wishes, goals, expectations and much more to the table, and this influences the way we live, work and, most importantly for us, how we learn. Unfortunately, this heterogeneity is not well reflected in most online learning opportunities, which instead try to provide learning content for the broadest possible range of learners.

The unfortunate thing about it?

Most of these trainings and courses follow one-size-fits-all strategies and do not take into account the different qualifications, needs and learning goals of their participants.

Our mission at edyoucated is to integrate the individual needs of our learners into their learning processes along a multitude of dimensions (some of which we are researching in our KAMAELEON project).

In this post, we’ll focus on the personalization with respect to previous knowledge.

Why?

Our main goal is to start the learning process at exactly the right place for each individual learner. Everybody enters the learning process with a different skill set, and we want to recommend the individual best next skills to learn. We call the general information about which skills a learner has already mastered the learner’s knowledge state.

But how can we actually figure out the knowledge state of our learners? The key is assessments!

You may already have experienced knowledge assessments as part of a job application process, where they are used to screen you and compare you to other applicants. They are used to determine the skills you have already mastered and will bring to the new company. But the real strength of assessments usually lies elsewhere:

It is identifying the areas where you are still lacking knowledge or experience!

Simply put, assessments help identify your needs, which can then be used to steer your learning activities and personal development. And they come in very different shapes.

The ones you might be most familiar with from school are formative and summative assessments. The former are taken during your learning process to decide about upcoming learning activities. The latter are evaluations of your competency at the end of a learning unit in order to check your mastery of the topic.

While the assessment types discussed so far come in the form of tests or exams, self-assessments require you to reflect on your knowledge and skills on your own. As a quick check:

How would you rate your self-reflection abilities on a scale from 1 to 10? 🙂

Hard to be precise? This is often the case for self-assessments, even if the questions are more focused. It is difficult and highly subjective to give ratings on a scale, isn’t it?

Yet, self-assessments play a large role when it comes to personal development and the choice of learning activities. Whenever you decide on the next thing to learn, apart from interest, you (sub-)consciously assess yourself: In which area do I need to improve my skills? Where am I already at a higher level?

Many learning recommendation systems adopt the kind of self-assessment described above, which leads to two major problems for the learner. On the one hand, the assessment becomes subjective and difficult for the learner to complete. On the other hand, the granularity (think: How skilled are you with Microsoft Excel?) only allows for rather imprecise recommendations; most platforms recommend entire courses. Just like Netflix offering entire series to watch.

But do they actually fit?

Or do you already know the entire beginning and need to skip through it to find the first relevant material? As a remedy, we use what we call atomic assessments.

At edyoucated, we break down learning topics into the most elementary bricks possible, opening up the possibility to recommend and learn skills at a very fine granularity.

What do we call them? Skill atoms, of course! What could be smaller?

To give you an example, an atomic skill for Microsoft Excel would not be “Excel Fundamentals”, but instead “Opening and Saving Workbooks” or “Using the Fill Handle”. At this granularity, topics are broken down not just into a few different bits, but into up to a few hundred. This gives us the opportunity to offer the best next skill for you to learn with much more precision.

Your benefit as learner? You get exactly what you need!

Atomic assessments also solve another problem: The resulting self-assessment questions, for example, “Do you know how to open and save workbooks in Excel?”, can be answered simply with “yes” or “no” instead of requiring a difficult assessment on a scale. And, just like that, the self-assessment is much less subjective and easier to handle.

Still problems? None that we can’t solve.

Nevertheless, while atomic assessments provide information about your skills at a fine granularity and enable very precise recommendations, they come at a price. Quite obviously, we need to gather the information about your full current knowledge state first.

And that can turn into a tedious task!

In the case of a self-assessment, you would be forced to answer a large number of questions (worst case: one question per atomic skill!) before being able to start with the actual learning process. In most practical learning situations, this is almost impossible or at least a major limitation.

So our challenge is to figure out which of the atomic skills you have already mastered and which are still unknown to you!

And yes, all of them. Without any further intelligence involved, we’ll have to ask you about every single one. As you can imagine, this can become quite a boring and frustrating task for you as a learner, so we definitely do not want to do this. But at least it can serve as a baseline for our evaluation: trading one question for one bit of information about you. This is the worst case, and does not create a pleasant user experience, don’t you think?

So let’s start with a simple example to warm up and see how we can improve this. If learners tell us they know how to create pivot tables in Microsoft Excel, how likely do you think it is they know how to enter some numbers in a worksheet as well? Close to 100%, right? Simply because pivot tables are much more advanced than entering content in cells.

So there is an inherent connection between the two atomic skills (pivot tables & content in cells): the mastery of one depends on the mastery of the other. We call the relationship of the atoms in such a situation (where one skill depends on the other) a prerequisite relation. In the language of graphs, if Atom A is a prerequisite for Atom B, then A is called an ancestor of B, and B is a descendant of A.

How does this help us?

It’s actually quite simple. Let’s say Atom A is a prerequisite for Atom B (depicted above). Then, if you do not know A, you cannot know B either and we do not have to ask for it anymore! Vice versa, if you have already mastered B, then we know that you must have been familiar with A all along. Great! If we leverage that information during the assessment we can save some questions.
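To make the two deductions concrete, here is a minimal sketch in plain Python. The two-atom graph, the atom names and the helper `apply_answer` are assumptions made up for illustration, not our production code.

```python
# Hypothetical mini-graph: each atom maps to its direct prerequisites.
PREREQS = {
    "entering_cell_content": [],
    "pivot_tables": ["entering_cell_content"],
}


def ancestors(atom, prereqs):
    """All direct and indirect prerequisites of `atom`."""
    found, stack = set(), list(prereqs[atom])
    while stack:
        current = stack.pop()
        if current not in found:
            found.add(current)
            stack.extend(prereqs[current])
    return found


def descendants(atom, prereqs):
    """All atoms that directly or indirectly require `atom`."""
    return {other for other in prereqs if atom in ancestors(other, prereqs)}


def apply_answer(state, atom, knows_it, prereqs):
    """Record one yes/no answer together with everything it implies."""
    new_state = dict(state)
    # "Yes" settles all ancestors as known, "No" settles all descendants as unknown.
    implied = ancestors(atom, prereqs) if knows_it else descendants(atom, prereqs)
    for settled in {atom} | implied:
        new_state[settled] = knows_it
    return new_state


# Knowing pivot tables implies knowing how to enter cell content.
print(apply_answer({}, "pivot_tables", True, PREREQS))
# Not knowing how to enter cell content rules out pivot tables as well.
print(apply_answer({}, "entering_cell_content", False, PREREQS))
```

Note that the remaining two cases (knowing the prerequisite, or not knowing the advanced atom) settle only the atom that was asked about.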

But how many can we actually save? To illustrate this, we have prepared a slightly more complicated situation below, with a couple more atoms and prerequisites. As a start, take a few seconds to ask yourself: Using the prerequisites, which atom would you ask for first to figure out as much information as possible?

Make a mental note of your choice and let us walk through this together. Say, we ask you about your mastery of Atom A and you tell us you don’t know it. Jackpot! Since A is a prerequisite for C, D and E, we can directly deduce that you cannot be familiar with those either. For B we’ll still have to ask, but you can see how nicely this cascades:

We’ve gained 4 bits of information with only one question!

But what if you tell us you do in fact know A? Then we haven’t really gained much: we’ve traded one question for one bit of information, our worst case. So maybe A isn’t the best atom to ask for as a start.

And we are pretty sure that you’ve figured it out by now. Just verify for yourself that Atom C is actually the best to start with. Why? It gives us 3 bits of information in every case, independent of whether you answer Yes or No. We can either include A and B or exclude D and E from your knowledge state.

For the two remaining atoms of the left subgraph (either A and B or D and E) it’s then a fifty-fifty situation whether we have to ask once or twice more. The same is true for the subgraph containing F and H. And for G we’ll have to ask anyway as it is not connected at all. Following this strategy, we will most likely have to ask 5 questions (or 6 in the worst case) to figure out your knowledge about 8 atoms.

We’ve almost cut the numbers in half, that’s quite something, isn’t it?
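If you would like to replay the walkthrough, the following sketch simulates such an assessment for one learner. The graph is an assumption reconstructed from the description in the text (A and B are prerequisites for C, C for D, D for E, F for H, and G is isolated), and the scoring rule (average number of newly settled atoms, with ties going to the better worst case) is one simple way to express the strategy, not necessarily what runs on our platform.

```python
# Assumed example graph (reconstructed from the text, not the original figure):
# each atom maps to its direct prerequisites.
PREREQS = {
    "A": [], "B": [], "C": ["A", "B"], "D": ["C"], "E": ["D"],
    "F": [], "G": [], "H": ["F"],
}


def ancestors(atom):
    """All direct and indirect prerequisites of `atom`."""
    found, stack = set(), list(PREREQS[atom])
    while stack:
        current = stack.pop()
        if current not in found:
            found.add(current)
            stack.extend(PREREQS[current])
    return found


def descendants(atom):
    """All atoms that directly or indirectly require `atom`."""
    return {other for other in PREREQS if atom in ancestors(other)}


def score(atom, state):
    """(average number of newly settled atoms at 50/50, worst-case number)."""
    settled = set(state)
    yes = len(({atom} | ancestors(atom)) - settled)
    no = len(({atom} | descendants(atom)) - settled)
    return (0.5 * yes + 0.5 * no, min(yes, no))


def assess(mastered):
    """Ask greedy yes/no questions until every atom is settled."""
    state, asked = {}, []
    while len(state) < len(PREREQS):
        open_atoms = [a for a in PREREQS if a not in state]
        question = max(open_atoms, key=lambda a: score(a, state))
        answer = question in mastered  # simulated learner reply
        asked.append(question)
        implied = ancestors(question) if answer else descendants(question)
        for atom in {question} | implied:
            state[atom] = answer
    return asked, state


asked, state = assess(mastered={"A", "B", "C", "F"})
print(asked)  # ['C', 'D', 'F', 'G', 'H']
```

For this simulated learner, five questions are enough to settle all eight atoms, and the first question is indeed about Atom C.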

Mathematically, the strategy we’ve been following can be described as picking the atom with the highest expected information gain in each step, where we assume that you answer Yes or No with a probability of 50% each. If you want a formula, the expected information gain for any atom X could be the following:

$$I(X) = 0.5 \cdot \#\text{ancestors}(X) + 0.5 \cdot \#\text{descendants}(X)$$

Let’s assume that the number (#) of ancestors and descendants counts the atom itself. Then Atom D, for example, would give us an expected information gain of $I(D) = 0.5 \cdot 4 + 0.5 \cdot 2 = 3$, which is ... the same as for Atom C?

Okay, you got us!

Interpreting the expected information gain this way, Atom D is actually just as valuable as C. But we’d argue that C still has an edge over D, as it works equally well if you answer with Yes or No. So let’s say this tie in information gain goes to Atom C nevertheless. And this actually hints at a way we can optimize.

What if we knew some better a-priori probabilities for you answering with Yes or No? Then we could shift the probabilities for ancestors and descendants in the formula, which might eventually give an edge to Atom D, who knows? There’s plenty more that we optimized on our platform in order to ask as few questions as we can. What exactly? We think at least a little mystery should remain for the moment! 🤫
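As a small illustration of that idea, the sketch below weights the number of ancestors with the probability of a Yes and the number of descendants with the probability of a No (both counts include the atom itself). The counts for C and D are the ones from the example above; the function name `expected_gain` and the probabilities 0.7 and 0.3 are assumptions chosen purely for illustration.

```python
# (number of ancestors, number of descendants), each counting the atom itself.
COUNTS = {"C": (3, 3), "D": (4, 2)}


def expected_gain(n_ancestors, n_descendants, p_yes=0.5):
    """A Yes settles the ancestors, a No settles the descendants."""
    return p_yes * n_ancestors + (1 - p_yes) * n_descendants


for p_yes in (0.5, 0.7, 0.3):
    gains = {atom: round(expected_gain(*counts, p_yes=p_yes), 2)
             for atom, counts in COUNTS.items()}
    print(p_yes, gains)
```

With equal probabilities both atoms tie at 3. If a Yes is more likely (0.7), D moves ahead with 3.4 against 3 for C; if a No is more likely (0.3), D falls back to 2.6 while C stays at 3.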

But wait! Where do the prerequisite relations actually come from?

Let’s solve at least a part of that mystery. The easiest and most accurate, but admittedly time-consuming way is to let experts model the prerequisite relations. We’ve got a whole team for this at edyoucated, working with experts in the respective topics, modeling prerequisites and other relations between atoms to save you time in your assessment process.

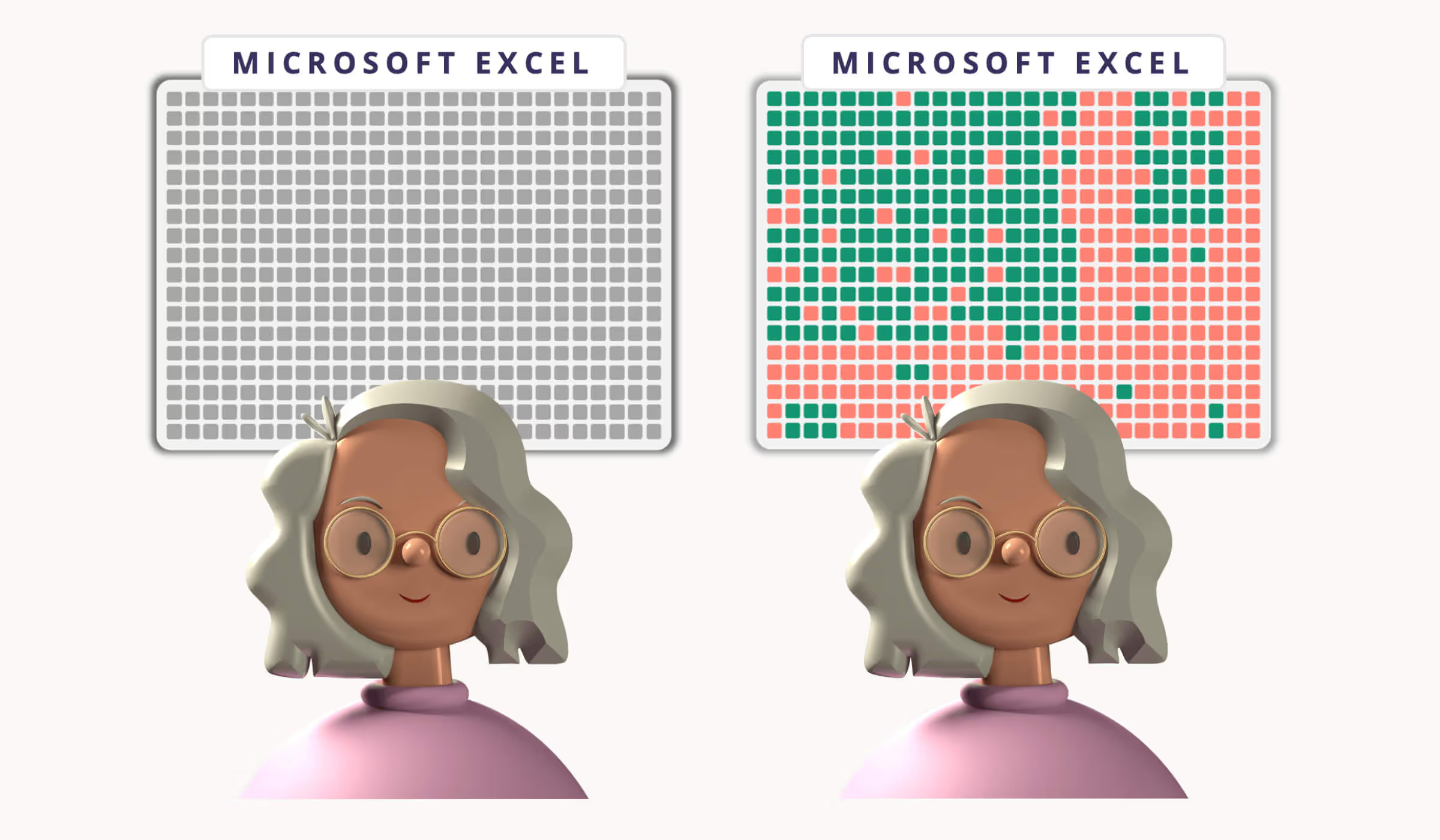

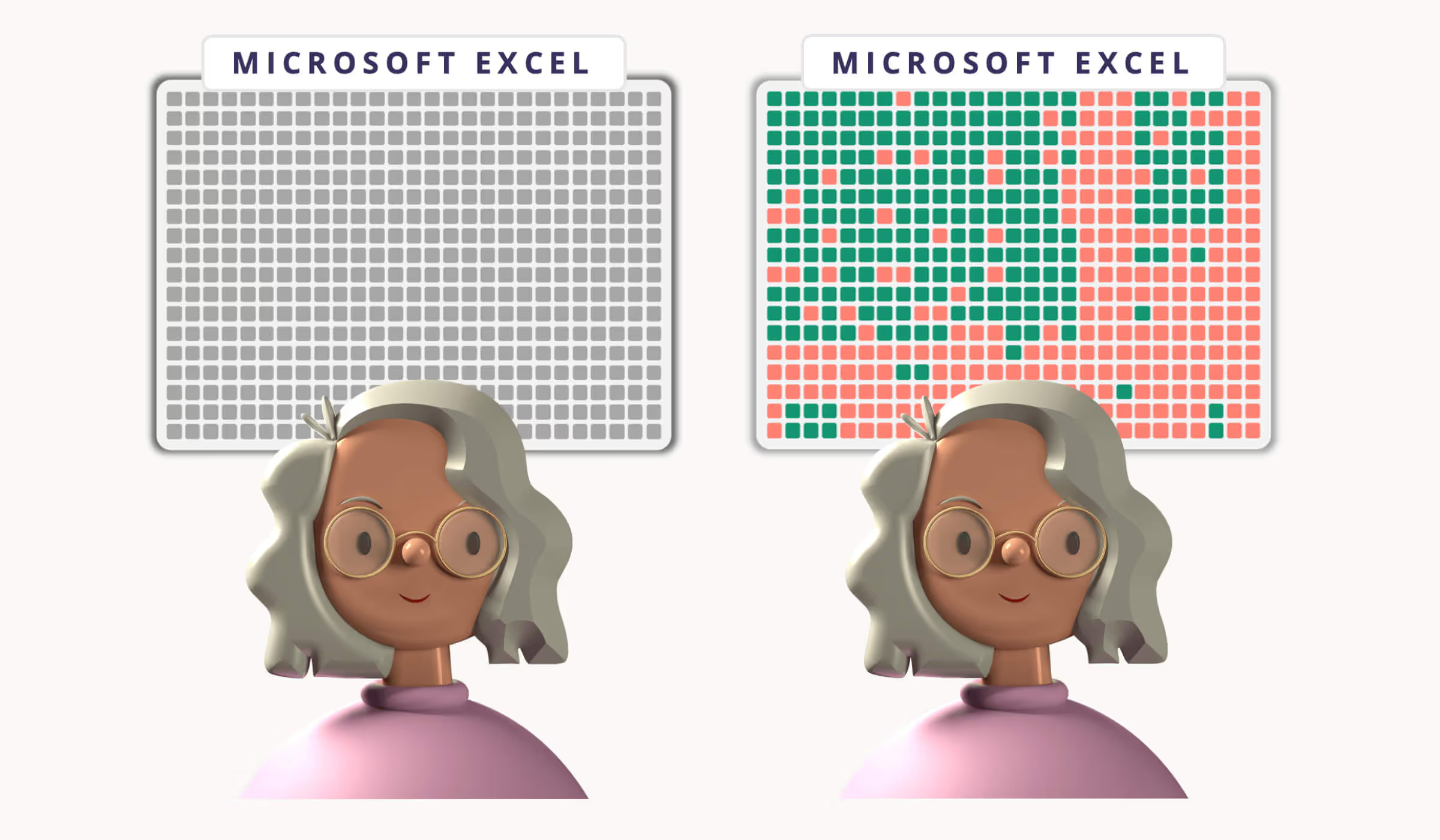

But we’ve promised some data science here, so let’s show a simple way to learn these relations from data. The following image shows the assessment results of a few of our learners for the topic of Microsoft Excel. As you can see there are quite a number of learners with no previous expertise in the topic (all-grey columns), but also many learners with more refined knowledge states.

This is the kind of data we need in order to learn prerequisites.

As a start, let us quickly re-interpret the idea of a prerequisite. What does it actually mean that A is a prerequisite for B? It means that if you know B, then you definitely know A. Or, if you know B, then the probability of knowing A is 100%. Let’s write this properly and maybe give it a little more freedom:

$$P(A \mid B) \geq 0.95$$

The conditional probability of knowing A given B is at least 95%, sounds correct, right? Even if you’re not a mathematician, I hope you can acknowledge the beauty of formulas. They just make everything that much more concise! The other way around works as well:

$$P(B^c \mid A^c) \geq 0.95$$

tells us that it is very likely that you do not know B given you do not know A (the little c denotes the complementary event).

And these two conditions actually give us a way to learn the prerequisites from the data above. For each pair of atoms A and B, we simply need to find all learners that have marked B as known and, among these, compute the ratio that also marked A as known. This is a nice estimate for the first conditional probability above, and we can, of course, compute the other one the same way.

Once we have found two atoms fulfilling both conditions, we have found a prerequisite relation!
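Here is a minimal sketch of that procedure in dependency-free Python. The five learners are toy data invented for illustration (real assessment data is far larger and noisier), and the helper names `share` and `learn_prerequisites` as well as the default threshold of 0.95 are assumptions of this sketch.

```python
from itertools import permutations

ATOMS = ["Entering Cell Content", "Mathematical Operators", "Pivot Tables"]

# Toy data, invented for illustration: one set of known atoms per learner.
LEARNERS = [
    {"Entering Cell Content", "Mathematical Operators", "Pivot Tables"},
    {"Entering Cell Content", "Mathematical Operators"},
    {"Entering Cell Content"},
    set(),
    {"Entering Cell Content", "Pivot Tables"},
]


def share(learners, condition, event):
    """Fraction of learners meeting `condition` that also meet `event`."""
    selected = [known for known in learners if condition(known)]
    if not selected:
        return None  # no learner to estimate the probability from
    return sum(1 for known in selected if event(known)) / len(selected)


def learn_prerequisites(learners, atoms, threshold=0.95):
    """Return (a, b) pairs where a is estimated to be a prerequisite for b."""
    edges = []
    for a, b in permutations(atoms, 2):
        # Estimate of P(A | B): among learners who know b, how many know a?
        p_a_given_b = share(learners, lambda k: b in k, lambda k: a in k)
        # Estimate of P(B^c | A^c): among learners who lack a, how many lack b?
        p_not_b_given_not_a = share(
            learners, lambda k: a not in k, lambda k: b not in k
        )
        if None in (p_a_given_b, p_not_b_given_not_a):
            continue
        if p_a_given_b >= threshold and p_not_b_given_not_a >= threshold:
            edges.append((a, b))
    return edges


for prerequisite, atom in learn_prerequisites(LEARNERS, ATOMS):
    print(f"{prerequisite} -> {atom}")
```

In this toy data, "Entering Cell Content" comes out as a prerequisite for both other atoms, while "Mathematical Operators" does not qualify as a prerequisite for "Pivot Tables" because one learner knows pivot tables without it. The resulting edge list could then be loaded into a directed graph for visualization.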

Neat, isn’t it? Adjusting the threshold (currently 0.95) lets us be a little more or less restrictive and helps us find more or fewer relations, depending on our goal (if we set it to 1, then both conditions are actually equivalent, so we only need to check one). We’ve run this for you in Python using networkx and pyvis to give a small glimpse of what this looks like below:

We have been a little more restrictive on the threshold here and added some post-processing of the graph to make the results more visible. As you can see, “Mathematical Operators” is a prerequisite for many other atoms, and “Excel Charts Introduction” is a prerequisite for more specific chart atoms, while other atoms remain unconnected.

Sounds about right, don’t you think?

A small side note, if you find a few connections that are “unintuitive”: We need to keep in mind that we are dealing with empirical probabilities derived from sometimes inconsistent learner answers, so everything has to be taken with a little care. In practice the whole thing has a lot more complications to it, but let those be our problem. For today it’s just about the idea.

There’s one last thing to tackle in this post. What if we make some mistakes, that is, model or learn a wrong prerequisite relation? In this case the predictions for your knowledge state might be wrong, too.

Well, of course, we make sure to test all our algorithms so that they make as few mistakes as possible. But we all know how data can be messy, noisy and flawed sometimes, so there will inevitably be some mistakes we make. That’s why we always let you as a learner verify our predictions at the very end. Just to make sure you never miss out on anything you can still learn. Speaking of learning, why don’t you try it out for yourself and learn something new along the way?

If you want to read some loosely related work, here you go:

Clair (2015) R. S. Clair, L. R. Winer, A. Finkelstein, A. Fuentes-Steeves, and S. Wald. Big hat and no cattle? The implications of MOOCs for the adult learning landscape. Canadian journal for the study of adult education, 27:65–82, 2015.

Daradoumis (2013) T. Daradoumis, R. Bassi, F. Xhafa, and S. Caballé. A review on massive e-learning (MOOC) design, delivery and assessment. In 2013 Eighth International Conference on P2P, Parallel, Grid, Cloud and Internet Computing, pages 208–213, 2013.

Gasevic (2016) D. Gasevic, S. Dawson, T. Rogers. Learning analytics should not promote one size fits all: The effects of instructional conditions in predicting academic success. The Internet and Higher Education, 28:68–84, 2016.

McBride (2004) B. G. McBride. Data-driven instructional methods: ’one strategy fits all’ doesn’t work in real classrooms. T.H.E. Journal Technological Horizons in Education, 31:38, 2004.

Murray (2004) R. Murray, M. Shea, B. Shea, and R. Harlin. Issues in education: Avoiding the one-size-fits-all curriculum: Textsets, inquiry, and differentiating instruction. Childhood Education, 81(1):33–35, 2004.

Rhode (2017) Rhode, J., Richter, S. & Miller, T. Designing Personalized Online Teaching Professional Development through Self-Assessment. TechTrends 61, 444–451 (2017).

Saadatdoost (2015) R. Saadatdoost, A. T. H. Sim, H. Jafarkarimi, and J. M. Hee. Exploring MOOC from education and information systems perspectives: a short literature review. Educational Review, 67(4):505–518, 2015.

Silver (2008) Silver, I., Campbell, C., Marlow, B. and Sargeant, J. (2008), Self-assessment and continuing professional development: The Canadian perspective. J. Contin. Educ. Health Prof., 28: 25-31.

Askar, P., & Altun, A. (2009). CogSkillnet: An ontology-based representation of cognitive skills.

Doignon, J.-P., & Falmagne, J.-C. (2012). Knowledge spaces. Springer Science & Business Media.

Falmagne, J.-C., & Doignon, J.-P. (2011). Knowledge Structures and Learning Spaces. In Learning Spaces: Interdisciplinary Applied Mathematics (pp. 23–41). Berlin, Heidelberg: Springer Berlin Heidelberg.

McGaghie, W. C., Adler, M., & Salzman, D. H. (2015). Mastery learning. William C. McGaghie Jeffrey H. Barsuk, 71.

Reich, J. R., Brockhausen, P., Lau, T., & Reimer, U. (2002). Ontology-based skills management: goals, opportunities and challenges. J. UCS, 8, 506–515.

West, M., Herman, G. L., & Zilles, C. (2015). Prairielearn: Mastery-based online problem solving with adaptive scoring and recommendations driven by machine learning. age, 26, 1.

edyoucated is funded by leading research institutions such as the Federal Ministry of Education and Research (BMBF), the Federal Institute for Vocational Education and Training (BIBB), and the Federal Ministry for Economic Affairs and Climate Action (BMWK).